Perplexica: 28K Stars for Privacy-First AI Search

Perplexica has gone from zero to more than 27,600 GitHub stars since launching in April 2024 by offering what Perplexity AI won't: a fully self-hosted, privacy-first AI search engine, built by a solo developer, that leaves you in control of where your queries go and who sees them. The project demands Docker expertise and homelab patience, yet thousands of developers accept configuration headaches rather than send their queries to the cloud. For a meaningful share of users, this MIT-licensed answer to proprietary AI search shows that data sovereignty can outweigh polish.

The project mirrors Perplexity AI's core experience—AI-synthesized answers with cited sources—but runs on hardware you control. Users willing to handle Docker networking and Ollama configuration choose deployment complexity over sending searches to someone else's cloud.

From Zero to 28K Stars: The Momentum Behind Self-Hosted AI Search

The numbers tell the story. GitHub activity shows the repository hit 5,414 stars in May 2024, then climbed past 27,600 over the following months. Hacker News threads pushed the project to front pages multiple times. YouTube tutorials appeared throughout mid-2024, with creators framing Perplexica as a "100% local Perplexity clone" that sidesteps subscription costs.

This isn't a single viral moment. External analysis points to frequent commits, ongoing feature refinement, and sustained community engagement.

What Perplexica Actually Does (And Why It Mirrors Perplexity)

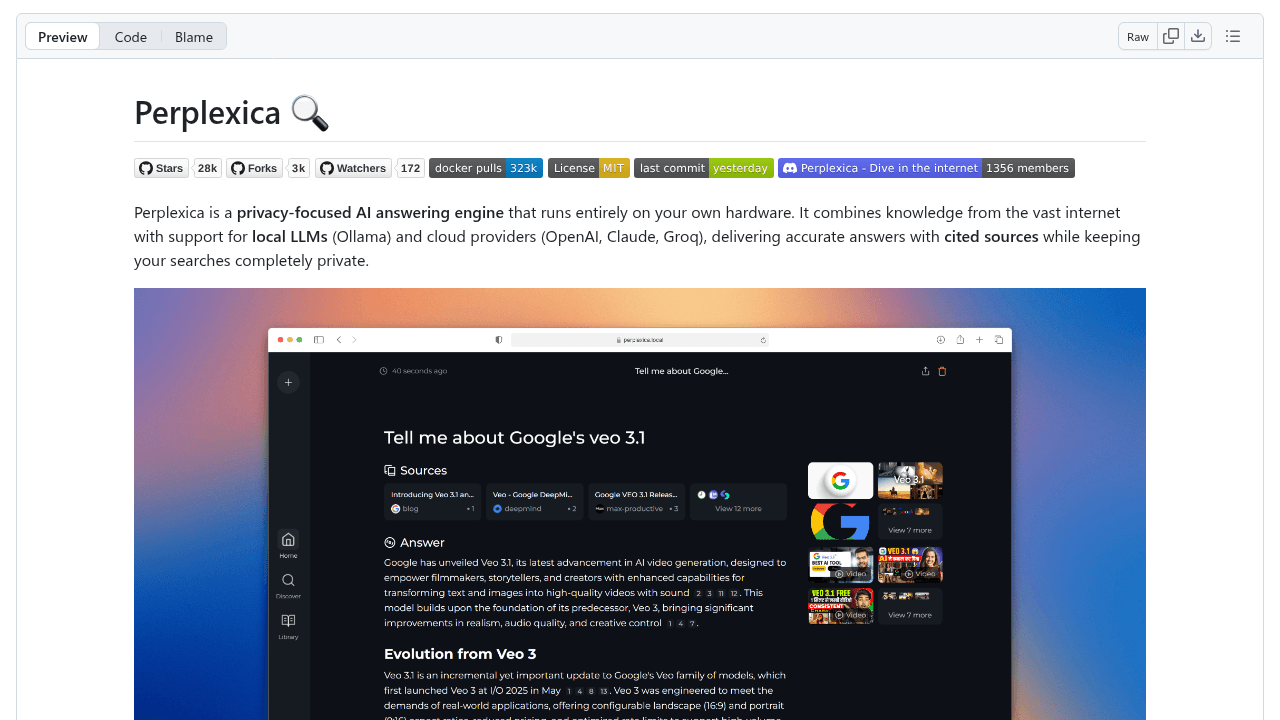

The technical foundation is SearXNG-powered meta-search combined with LLM synthesis and cited sources, packaged for self-hosting. The system supports multiple research modes (web, academic papers, YouTube, Reddit, Wolfram Alpha, and a writing assistant) and uses similarity search over embeddings to surface relevant context.
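The embedding step can be illustrated with a minimal reranking sketch. It assumes each search result has already been turned into a vector by some embedding model (local or cloud) and scores results by cosine similarity to the query vector. The function names, the toy three-dimensional vectors, and the `threshold` parameter are hypothetical; this is not Perplexica's own code.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two equal-length vectors; 0.0 if either has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rerank(
    query_vec: list[float],
    docs: list[tuple[str, list[float]]],
    top_k: int = 3,
    threshold: float = 0.0,
) -> list[tuple[str, float]]:
    """Return up to top_k (title, score) pairs, most similar first, dropping scores below threshold."""
    scored = [(title, cosine_similarity(query_vec, vec)) for title, vec in docs]
    kept = [pair for pair in scored if pair[1] >= threshold]
    kept.sort(key=lambda pair: pair[1], reverse=True)
    return kept[:top_k]


# Toy 3-dimensional "embeddings" standing in for real model output.
query = [1.0, 0.0, 0.0]
results = [
    ("Result A", [0.9, 0.1, 0.0]),
    ("Result B", [0.0, 1.0, 0.0]),
    ("Result C", [0.7, 0.7, 0.0]),
]
print(rerank(query, results, top_k=2))
```

Real embeddings have hundreds of dimensions, but the ranking logic is the same: results pointing in roughly the same direction as the query score near 1.0 and rise to the top of the context handed to the LLM.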

You can run it with local LLMs via Ollama or with major cloud providers such as OpenAI, Anthropic (Claude), Google (Gemini), and Groq. The bundled Docker setup includes SearXNG and serves a web UI on localhost:3000. It's Perplexity's feature set, rebuilt as MIT-licensed infrastructure you deploy yourself.
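SearXNG exposes a JSON search API, which is what makes it usable as a retrieval backend for LLM synthesis. The sketch below builds such a request URL and maps a focus mode to a set of SearXNG engines. The engine mapping, the `build_searxng_url` name, and the `http://localhost:8080` address in the usage line are assumptions for illustration, not Perplexica's actual configuration.

```python
from urllib.parse import urlencode

# Hypothetical focus-mode -> SearXNG engine mapping (illustrative, not Perplexica's config).
FOCUS_ENGINES: dict[str, list[str]] = {
    "web": [],  # empty: let SearXNG use its default engines
    "academic": ["arxiv", "google scholar", "pubmed"],
    "youtube": ["youtube"],
    "reddit": ["reddit"],
}


def build_searxng_url(base_url: str, query: str, focus: str = "web") -> str:
    """Build a SearXNG /search URL that asks for JSON results for the given focus mode."""
    if focus not in FOCUS_ENGINES:
        raise ValueError(f"unknown focus mode: {focus!r}")
    params = {"q": query, "format": "json"}
    engines = FOCUS_ENGINES[focus]
    if engines:
        params["engines"] = ",".join(engines)
    return f"{base_url.rstrip('/')}/search?{urlencode(params)}"


print(build_searxng_url("http://localhost:8080", "rust async", "academic"))
```

The usage line yields `http://localhost:8080/search?q=rust+async&format=json&engines=arxiv%2Cgoogle+scholar%2Cpubmed`. SearXNG only serves `format=json` when JSON is listed under `search.formats` in its `settings.yml`; otherwise the request is rejected.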

The Privacy Pitch: Why Users Choose Deployment Pain Over Cloud Convenience

One user called Perplexica "the best project I've found using local models" and described using it "as a casual user and as a researcher." That captures the trade-off: accept configuration headaches in exchange for full data control.

The contrast with Perplexity AI is deliberate. Hacker News discussions about "local Perplexity alternatives" frame the choice around privacy, not result quality. Perplexica's MIT license and local LLM support stand against Perplexity's subscription model and closed infrastructure.

Deployment complexity is the price of sovereignty: users must run Docker, get Ollama's configuration-sensitive setup right, and make sure nothing blocks port 11434, Ollama's default API port.
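A quick way to rule out a blocked port is to test whether anything accepts TCP connections on 11434 from the machine (or container) that runs Perplexica. The helper below is a hypothetical diagnostic that uses only Python's standard library; a successful connection shows the port is reachable, not that Ollama itself is healthy.

```python
import socket


def port_is_open(host: str, port: int = 11434, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hostnames.
        return False
```

Running it both on the host and inside the Perplexica container, for example `port_is_open("localhost")` and `port_is_open("host.docker.internal")`, helps locate where the path breaks. If the port answers, requesting Ollama's `/api/tags` endpoint, which lists locally pulled models, confirms that Ollama is the service listening.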

The Deployment Reality: Docker Fragility and Homelab Tolerance

The GitHub issue tracker shows the downside. Users report "Perplexica/Ollama/SearXNG connection issues" in which searches return no data and the Discover tab fails, and backend connection errors surface when Docker networking is misconfigured. Across deployment reports, network configuration is the most common source of trouble.
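A frequent cause of these backend connection errors is what `localhost` means: inside a Docker container it refers to the container itself, so an Ollama URL such as `http://localhost:11434` that works on the host points nowhere from inside Perplexica's container. Docker Desktop provides the `host.docker.internal` alias for reaching the host; on Linux it generally has to be mapped explicitly, for example with `--add-host=host.docker.internal:host-gateway`. The function below is a hypothetical sketch of that rewrite, and the `/.dockerenv` check is a common heuristic rather than a guarantee.

```python
import os
from urllib.parse import urlsplit, urlunsplit

LOOPBACK_HOSTS = {"localhost", "127.0.0.1", "::1"}


def running_in_docker() -> bool:
    """Heuristic: Docker usually creates /.dockerenv inside containers (not guaranteed)."""
    return os.path.exists("/.dockerenv")


def container_reachable_url(
    url: str, in_container: bool, host_alias: str = "host.docker.internal"
) -> str:
    """Replace a loopback hostname with host_alias when running inside a container.

    Any user:password part of the URL is dropped in the rewrite; other parts are kept.
    """
    parts = urlsplit(url)
    if not in_container or parts.hostname not in LOOPBACK_HOSTS:
        return url
    netloc = host_alias if parts.port is None else f"{host_alias}:{parts.port}"
    return urlunsplit(parts._replace(netloc=netloc))


print(container_reachable_url("http://localhost:11434", running_in_docker()))
```

On Linux, rewriting the URL may not be enough: Ollama listens only on 127.0.0.1 by default, so it may also need `OLLAMA_HOST=0.0.0.0` before it accepts connections arriving from containers.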

One user noted that lack of access control prevents safely exposing the instance beyond their LAN. This is infrastructure for technical users running Proxmox homelabs, not point-and-click software.
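Without built-in access control, anyone who can reach the port can use the instance. One stopgap, sketched below as a hypothetical gate and not a Perplexica feature, is to accept requests only from private or loopback addresses using Python's `ipaddress` module. It is an allowlist, not authentication: behind a reverse proxy such as nginx, the application sees the proxy's address, so a check like this belongs at the proxy or must rely on a trusted forwarded-for header.

```python
import ipaddress


def allow_request(client_addr: str) -> bool:
    """Allow only private-range or loopback clients; deny public and malformed addresses.

    Python's notion of "private" also covers link-local and some reserved ranges.
    """
    try:
        ip = ipaddress.ip_address(client_addr)
    except ValueError:
        return False  # not a valid IPv4/IPv6 address
    return ip.is_private or ip.is_loopback


for addr in ("192.168.1.10", "127.0.0.1", "8.8.8.8"):
    print(addr, allow_request(addr))
```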

Who's Actually Using This (And How)

Community reports describe homelab operators splitting SearXNG, Ollama, and Perplexica across separate Docker instances and exposing them on their LANs through nginx. Researchers combine local Llama models with a cloud GPT-4 fallback for different query types.
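The local-first pattern with a cloud fallback can be expressed as a small routing function. In this sketch the backends are plain callables standing in for real model calls, and both the `prefers_cloud` predicate and the fallback-on-`OSError` policy are hypothetical choices; in practice the local callable might wrap an Ollama request and the cloud one an OpenAI request.

```python
from typing import Callable

Backend = Callable[[str], str]


def route(
    query: str,
    local: Backend,
    cloud: Backend,
    prefers_cloud: Callable[[str], bool],
) -> tuple[str, str]:
    """Return (backend_name, answer): local model first, cloud for flagged queries or on local failure."""
    if prefers_cloud(query):
        return "cloud", cloud(query)
    try:
        return "local", local(query)
    except OSError:
        # e.g. ConnectionError when the local Ollama server is unreachable
        return "cloud", cloud(query)


# Hypothetical stand-ins for real model calls.
def local_llama(q: str) -> str:
    return f"[local] {q}"


def cloud_gpt4(q: str) -> str:
    return f"[cloud] {q}"


def long_form(q: str) -> bool:
    return len(q.split()) > 50  # arbitrary illustrative cutoff


print(route("what is SearXNG?", local_llama, cloud_gpt4, long_form))
```

The fallback path has a privacy cost: whenever it triggers, the query leaves the local network, which is exactly what self-hosting was meant to avoid. Some users may prefer to surface the local error instead.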

No named enterprises or major open-source projects appear in public documentation or the issue tracker. This is individual developer and hobbyist territory.

Can One Developer Sustain This Against a Funded Competitor?

Perplexica competes directly with Perplexity AI and shares space with other open-source alternatives like MiniPerplx and Morphic. It differentiates by bundling SearXNG, supporting multiple AI providers, and offering multi-focus modes in a single self-hostable package.

The 27,600 stars suggest users will choose freedom over polish. Whether one maintainer's frequent commits and community pull requests can match enterprise development velocity remains the open question. The momentum is real. Sustainability is unproven.

ItzCrazyKns/Perplexica

Perplexica is an AI-powered answering engine. It is an open-source alternative to Perplexity AI.