OpenCode Hit 650K Users While Big Tech Changed the Rules

Anthropic's API restrictions turned OpenCode from another AI coding tool into a developer rebellion. The project now counts 650,000 monthly users and 500+ contributors, and it pairs an architecture that works with any LLM provider with serious technical problems, including runaway CPU usage and memory leaks. The metrics show momentum, but the controversies reveal what it costs to escape vendor lock-in.

When Anthropic restricted third-party CLI access to Claude in October, developers faced a choice: pay API rates five times higher than subscription pricing, or switch tools. OpenCode gained 2,000 GitHub stars in 24 hours.

Turns out open source looks less like ideology and more like insurance when vendors change pricing overnight.

650,000 Developers Chose Provider Independence

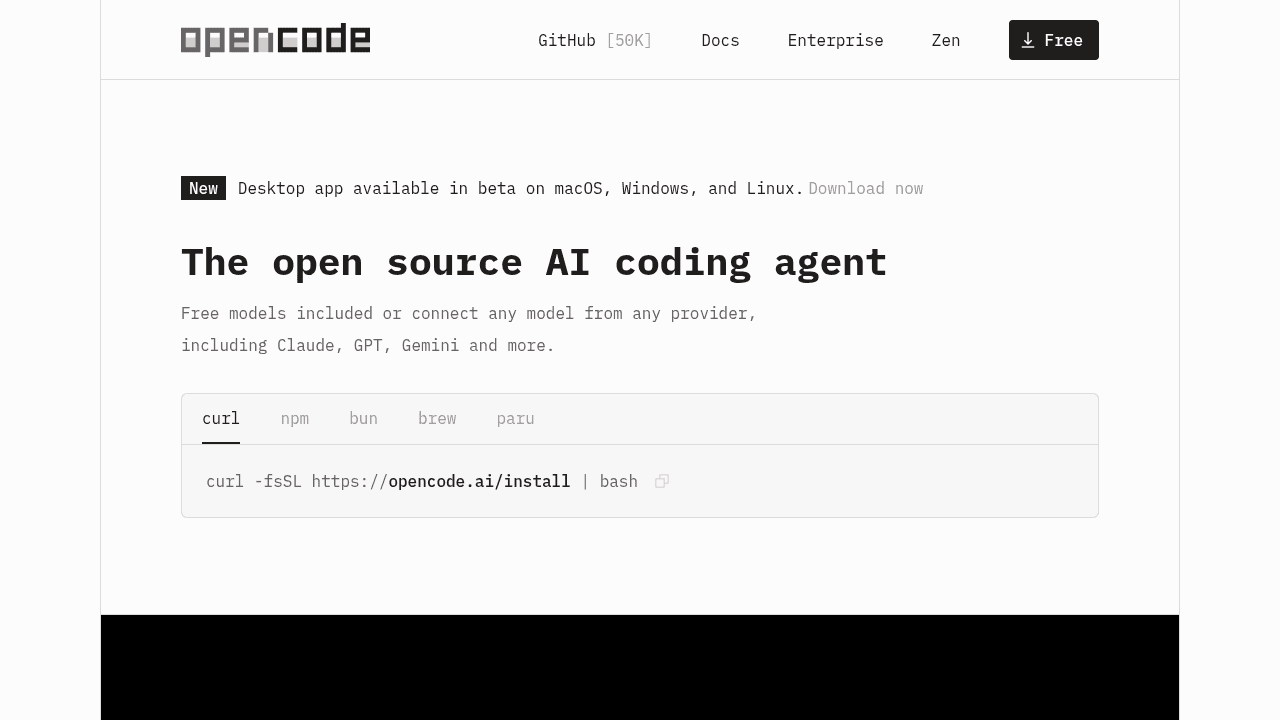

OpenCode now serves over 650,000 developers monthly, built on an architecture that connects to Claude, OpenAI, Google, or local Ollama models. The technical difference is architectural, not philosophical.
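To make the architectural point concrete, here is a minimal sketch of what provider independence can look like in code. It is not OpenCode's implementation: `ProviderRegistry`, `echo_backend`, the `provider/model` id format, and the model names are all hypothetical, and the backends are stand-ins that never touch a network.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# A chat message is a plain dict: {"role": "user", "content": "..."}
Message = Dict[str, str]
# A provider backend takes (model, messages) and returns the reply text.
Backend = Callable[[str, List[Message]], str]


@dataclass
class ProviderRegistry:
    """Hypothetical registry mapping a provider name to a backend callable."""
    backends: Dict[str, Backend]

    def complete(self, model_id: str, messages: List[Message]) -> str:
        # Model ids are written as "provider/model" in this sketch (an assumption).
        provider, _, model = model_id.partition("/")
        if not model or provider not in self.backends:
            raise ValueError(f"unknown provider or malformed id: {model_id!r}")
        return self.backends[provider](model, messages)


def echo_backend(label: str) -> Backend:
    """Stand-in backend for illustration; a real one would call the vendor's API."""
    def run(model: str, messages: List[Message]) -> str:
        return f"[{label}:{model}] {messages[-1]['content']}"
    return run


registry = ProviderRegistry(backends={
    "anthropic": echo_backend("anthropic"),
    "ollama": echo_backend("ollama"),
})

prompt = [{"role": "user", "content": "rename this function"}]
# Switching vendors is a change to one string, not to the calling code.
print(registry.complete("anthropic/some-model", prompt))
print(registry.complete("ollama/some-local-model", prompt))
```

The design choice worth noticing is that the calling code depends only on the `Backend` signature, so a pricing or access change at one vendor is absorbed by swapping a registry entry.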

The tool implements a client/server model supporting multiple frontends: terminal UI, web interface, desktop app. It ships with LSP (Language Server Protocol) support, meaning the agent receives compiler and type-checker diagnostics as it edits rather than generating code that breaks silently.
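As a rough illustration of that feedback loop, the sketch below turns the payload of an LSP `textDocument/publishDiagnostics` notification into short error lines an agent could read before its next edit. The payload shape and severity codes follow the LSP specification; the function name `summarize_errors`, the sample file, and the idea of feeding these lines back to the model are assumptions, not a description of OpenCode's internals.

```python
from typing import Any, Dict, List

# Severity codes defined by the Language Server Protocol.
SEVERITY_NAMES = {1: "error", 2: "warning", 3: "information", 4: "hint"}


def summarize_errors(params: Dict[str, Any]) -> List[str]:
    """Format the error-level diagnostics of one publishDiagnostics payload."""
    uri = params["uri"]
    lines = []
    for diag in params.get("diagnostics", []):
        # Severity is optional in the spec; this sketch treats a missing value as an error.
        severity = diag.get("severity", 1)
        if severity != 1:
            continue
        start = diag["range"]["start"]
        # LSP positions are zero-based; editors usually display one-based numbers.
        lines.append(
            f"{uri}:{start['line'] + 1}:{start['character'] + 1}: "
            f"{SEVERITY_NAMES[severity]}: {diag['message']}"
        )
    return lines


# Hypothetical payload, shaped like what a TypeScript language server might send.
example = {
    "uri": "file:///src/app.ts",
    "diagnostics": [
        {
            "range": {"start": {"line": 11, "character": 4},
                      "end": {"line": 11, "character": 8}},
            "severity": 1,
            "message": "Cannot find name 'usre'.",
        },
        {
            "range": {"start": {"line": 20, "character": 0},
                      "end": {"line": 20, "character": 3}},
            "severity": 2,
            "message": "'tmp' is declared but never used.",
        },
    ],
}

for line in summarize_errors(example):
    print(line)
```

Only the error is reported here; the warning is filtered out so the agent's context is spent on what actually blocks compilation.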

For teams handling code that can't touch cloud services, or engineering leaders tired of re-training teams every time a vendor adjusts pricing, the 500+ contributors and 50,000+ stars suggest this is production-ready.

The Performance Issues Are Public

The GitHub issues tell the full story. The TUI spawns Erlang processes consuming 685% CPU on 16-core machines, driving system load past 50. Single sessions have leaked memory up to roughly 23GB. Text rendering hits 200-300% CPU during output.
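For readers less used to these units, a quick back-of-the-envelope conversion helps. It assumes the percentages follow the common `top` convention in which 100% equals one fully busy core, which the issue reports are not confirmed to use; the helper names are illustrative.

```python
def cores_busy(cpu_percent: float) -> float:
    """Convert a top-style CPU percentage (100% = one core) to a core count."""
    return cpu_percent / 100.0


def machine_share(cpu_percent: float, core_count: int) -> float:
    """Fraction of the whole machine's CPU capacity that the percentage represents."""
    return cores_busy(cpu_percent) / core_count


def load_per_core(load_average: float, core_count: int) -> float:
    """Average number of tasks competing for each core at a given load average."""
    return load_average / core_count


CORES = 16  # machine size quoted in the reports

print(f"685% CPU  = {cores_busy(685):.2f} cores busy")
print(f"          = {machine_share(685, CORES):.1%} of a {CORES}-core machine")
print(f"load 50   = {load_per_core(50, CORES):.2f} tasks per core")
print(f"200-300%  = {cores_busy(200):.0f} to {cores_busy(300):.0f} cores for text rendering")
```

Under that assumption, a load of 50 means roughly three tasks queued for every core, which is why the machine feels unresponsive even when no single process is pinned at the maximum.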

These aren't edge cases—they're active complaints from production users. The difference? Issues are public, assigned, and being patched by contributors instead of sitting in vendor support queues.

The trade-off: performance problems in exchange for control over your tooling stack and provider flexibility. For startups optimizing for shipping velocity, that's a bad deal. For infrastructure teams at regulated enterprises where vendor lock-in compounds over years, the calculation changes.

The Governance Context

The repository carries organizational history worth understanding: when original creator Kujtim Hoxha joined Charm, other contributors forked the project and retained the OpenCode name and domain. The community continued development under new maintainers.

For engineers evaluating project stability, the signal is 6,500+ commits and accelerating contributor growth post-fork, not the organizational change.

What Control Costs

AI coding agents moved from early adopter experiments to mainstream engineering workflows in 2025. Anthropic's API restrictions revealed the dependency risk.

OpenCode's growth answers a specific question: what does sovereignty cost in AI tooling? The answer involves CPU overhead, memory management, and UI lag. It also means not re-negotiating contracts when vendors change business models, not migrating codebases when your LLM provider shifts pricing, and not explaining to security why tools need repository access.

The architecture proves you can build AI coding agents without platform lock-in. The usage numbers prove developers with budget authority are making that calculation. The technical issues prove this isn't finished—just functional enough that 650,000 developers decided the control was worth the trade-offs.