Daytona: AI Agents Need Execution Sandboxes, Not Just IDEs

AI agents generate code faster than traditional infrastructure can safely execute it. Daytona emerged in February 2024 as container-based execution infrastructure, hitting 49K GitHub stars in 14 months by solving the isolation bottleneck in agentic workflows. Container speed versus VM security, streaming gaps, and the exploding sandbox category define the 2025 stack decisions.

AI agents generate thousands of lines of code in seconds. Running that code safely, without compromising your own systems, takes infrastructure most teams don't have yet. That gap explains why Daytona reached 49,000 GitHub stars in fourteen months and why container-based execution sandboxes became the infrastructure category no one saw coming in 2024.

The Execution Bottleneck: When Code Generation Outpaces Safe Infrastructure

The shift from AI coding assistants to autonomous agents created a new infrastructure problem. Copilot suggests lines; agents write entire functions, execute shell commands, and iterate on their own output. Running untrusted AI-generated code requires isolation that blocks unauthorized filesystem access, network exploits, and resource exhaustion. Traditional VMs provide that isolation, but they add seconds of overhead to workflows where agents expect millisecond responses.
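As a rough illustration of just one of these controls, the sketch below runs a snippet in a separate Python process with a wall-clock timeout and a throwaway working directory. The helper name `run_untrusted` and its defaults are hypothetical. A plain child process is not a sandbox: it bounds runtime but does nothing to restrict filesystem or network access, which is the gap container and VM isolation are meant to close.

```python
import subprocess
import sys
import tempfile


def run_untrusted(code: str, timeout_s: float = 2.0) -> dict:
    """Run `code` in a child Python process with a wall-clock limit.

    Hypothetical helper for illustration only: this bounds runtime, but it
    does NOT block filesystem or network access the way a real sandbox must.
    """
    with tempfile.TemporaryDirectory() as workdir:
        try:
            proc = subprocess.run(
                [sys.executable, "-I", "-c", code],  # -I: isolated mode
                cwd=workdir,          # scratch directory, removed afterwards
                capture_output=True,  # collect stdout/stderr for the caller
                text=True,
                timeout=timeout_s,    # child is killed if it runs too long
            )
        except subprocess.TimeoutExpired:
            return {"ok": False, "stdout": "", "error": "timeout"}
    return {"ok": proc.returncode == 0, "stdout": proc.stdout, "error": proc.stderr}
```

Calling `run_untrusted("while True: pass", timeout_s=0.5)` returns an error result instead of hanging the caller, which is the behavior an agent loop needs from any execution layer, however the isolation underneath is built.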

Daytona's architecture uses containers instead of VMs. That choice prioritizes speed over kernel-level isolation, trading paranoid security for the performance AI workflows demand. It's the same calculation that separated Docker from traditional virtualization a decade ago, now applied to the agent execution layer.

Why Containers Won Speed: Sub-90ms vs Multi-Second VM Spin-Up

Container-based sandboxes launch in under 90 milliseconds, fast enough for agents to treat execution as synchronous rather than queued. Daytona wraps this in development environment infrastructure—Git integration, language server protocol support, unlimited session persistence, and Docker/OCI compatibility that lets teams use existing images without rewriting toolchains.

The alternative approach—microVMs like e2b's Firecracker-based isolation—adds kernel-level security at the cost of startup latency and image compatibility. Microsandbox chose traditional VMs, accepting multi-second spin-up times for workloads where isolation matters more than iteration speed. Modal and others time-limit runtimes to control resource sprawl, while Daytona allows indefinite persistence for long-running agent sessions.

None of these approaches is strictly better or worse; they are architectural answers to different threat models. If your agent writes Kubernetes manifests, containers work. If it's compiling untrusted kernel modules, you need VMs.
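To put the startup figures in perspective, the arithmetic below totals the time an agent spends waiting on sandbox startup over a run of execute-and-revise iterations. The 90 ms figure is the upper bound quoted above. The 2-second VM figure, the 100-iteration loop, and the premise that each iteration starts a fresh sandbox are illustrative assumptions, not measurements.

```python
def startup_overhead_s(startup_ms: float, iterations: int) -> float:
    """Total time spent waiting on sandbox startup, in seconds."""
    return startup_ms * iterations / 1000


ITERATIONS = 100     # assumed length of an agent's edit-run loop
CONTAINER_MS = 90    # upper bound quoted for container sandboxes
VM_MS = 2000         # assumed stand-in for "multi-second" VM spin-up

# Assumes a fresh sandbox is started on every iteration.
container_total = startup_overhead_s(CONTAINER_MS, ITERATIONS)
vm_total = startup_overhead_s(VM_MS, ITERATIONS)

print(f"containers: {container_total:.0f} s, VMs: {vm_total:.0f} s")
```

Under these assumptions the container path costs about 9 seconds of startup in total and the VM path about 200 seconds. Reusing a long-lived sandbox across iterations would shrink both numbers, which is one reason session persistence matters in this comparison.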

The 49K Star Trajectory: Timing the Agent Infrastructure Wave

Daytona launched in February 2024, the same quarter OpenAI released GPT-4 Turbo with function calling and Anthropic introduced Claude 3's tool use. By year's end, it had become the #1 open-source cloud development environment with 13,000+ stars. Another four months added 36,000 more, bringing the total to roughly 49,000, as AI startups and enterprises moved agent architectures to production.

This isn't one project finding product-market fit. It's a category explosion. E2b, microsandbox, and Modal all launched within months of each other, each funded, each attacking the same bottleneck with different isolation primitives. The competition validates the problem more than any individual solution.

Production Gaps: Streaming, GPU, and Session Ergonomics

Container speed comes with container limitations. Daytona doesn't support GPU workloads, blocking ML training or inference tasks. Image builds run slowly when agents iterate on dependencies. Streaming and interactive outputs lag, frustrating workflows that need real-time feedback. Some users report intermittent JetBrains Gateway SSH worker failures on sandbox connections.

These aren't Daytona-specific shortcomings—they're the rough edges of a category still figuring out its primitives. In 2015, Docker didn't have multi-stage builds or BuildKit. In 2025, agent sandboxes don't have reliable GPU passthrough or sub-second image rebuilds.

Stack Decisions for 2025: When to Choose Container Isolation

Choose container-based sandboxes for CPU-bound agent tasks where iteration speed matters more than kernel-level isolation—code generation, testing, data transformation. Daytona's SDK supports eval pipelines and AI-assisted coding workflows where sub-second feedback loops determine agent effectiveness.

Choose microVM solutions when agents handle sensitive data, compile binaries, or operate in regulated environments where container escape vulnerabilities create compliance risk. The architectural trade-off isn't going away. It's the infrastructure decision every team building agent systems will make in 2025.
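The criteria in this section can be written down as a small decision helper. This is only a sketch of the rule of thumb above: the `Workload` fields and the `choose_isolation` function are hypothetical names, and a real decision would weigh more factors than these three flags.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical description of what an agent's code will touch."""
    handles_sensitive_data: bool = False
    compiles_binaries: bool = False
    regulated_environment: bool = False


def choose_isolation(w: Workload) -> str:
    """Return "microvm" if any high-risk flag is set, else "container"."""
    if w.handles_sensitive_data or w.compiles_binaries or w.regulated_environment:
        return "microvm"
    return "container"
```

A test-running or data-transformation agent with no flags set lands on `"container"`, while setting any single flag, such as `regulated_environment=True`, moves the workload to `"microvm"`.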

daytonaio/daytona

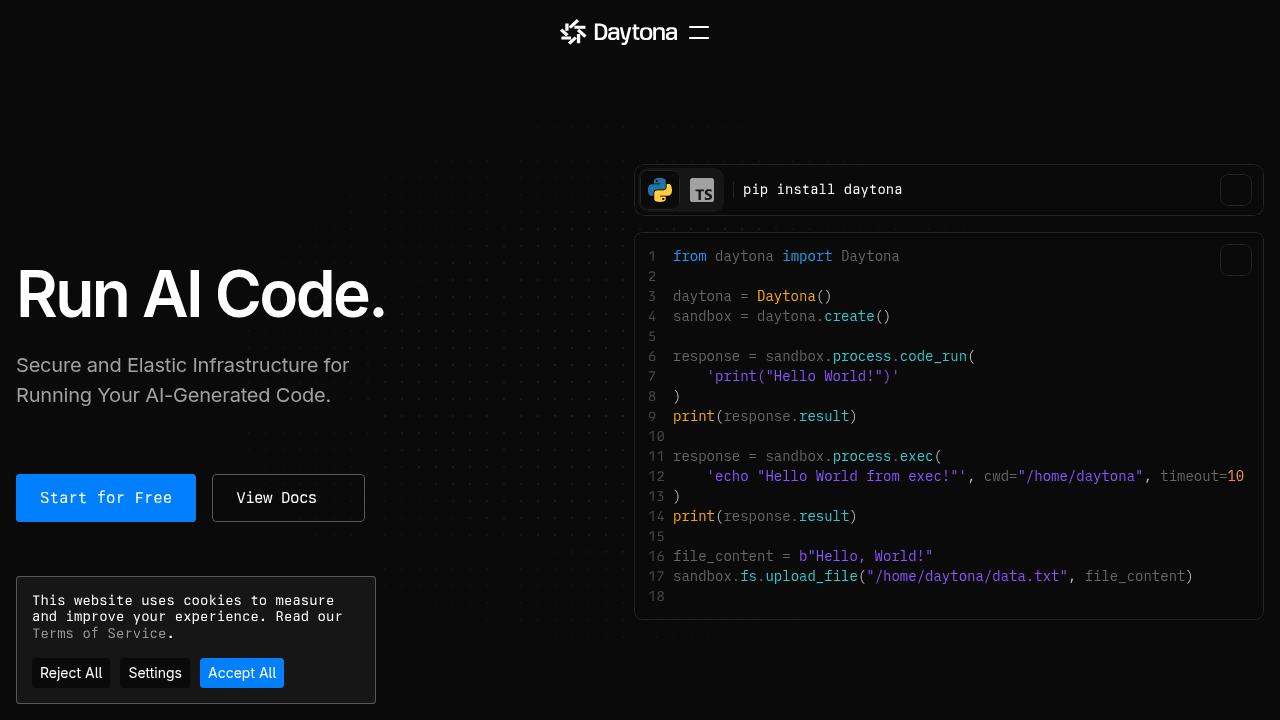

Daytona is a Secure and Elastic Infrastructure for Running AI-Generated Code